No Recycled WebDrivers

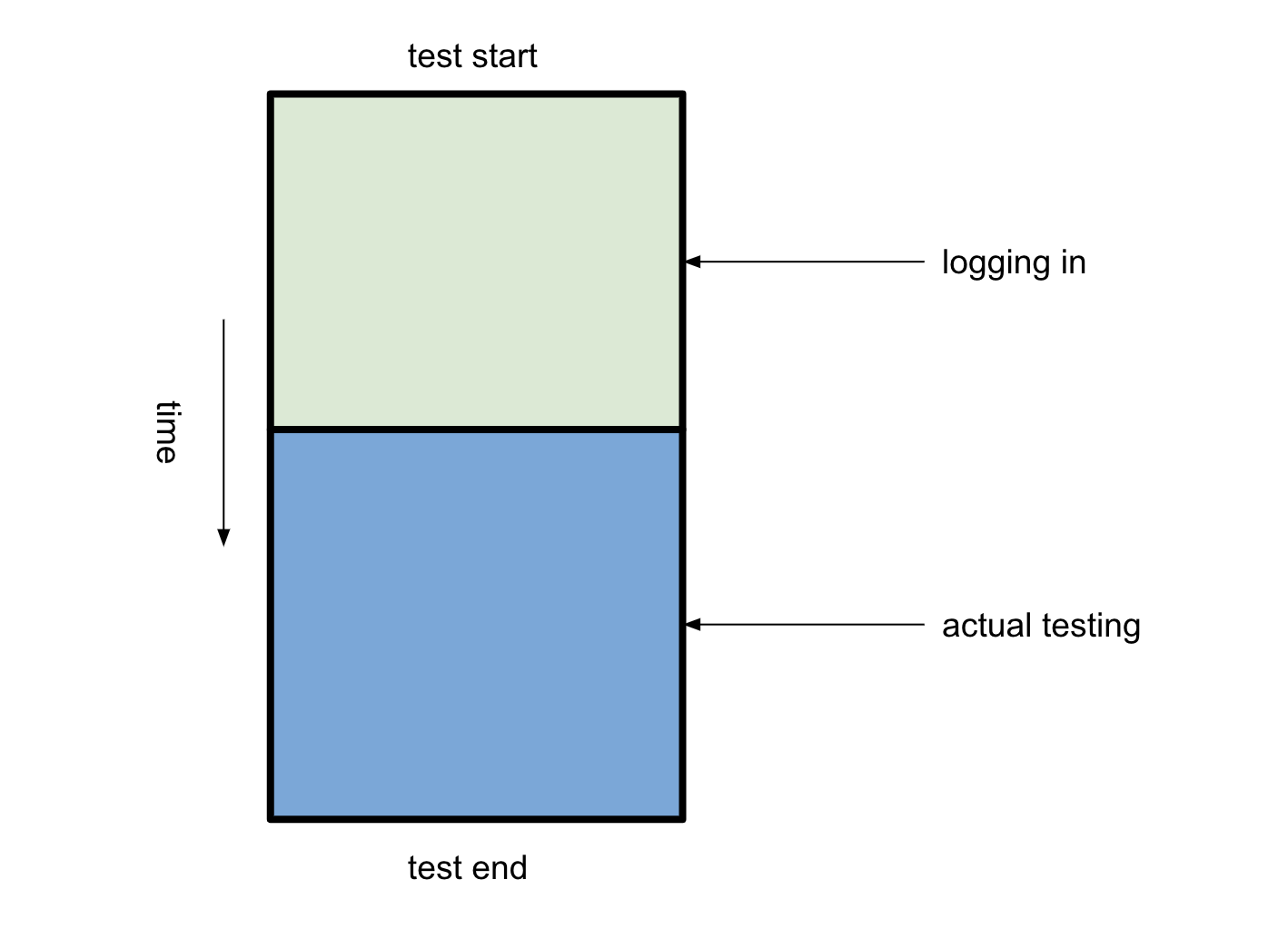

If you are beginning a test automation project using Selenium, one of the first things you'll probably notice is how long it takes to actually get to the part you want to test.

Your test has to open up a fresh browser, log in, navigate to the page you want, and then and only then can the real work begin. In fact, you might recognize a pattern: 99% of your tests, and quite possibly all the remaining tests after writing the login tests, will need to do this same, repeated process of logging in to your web application.

Programmers are trained to identify duplication of code and inefficient algorithms. So when they see that they need to copy and paste the login routine into each new test, there is a nearly irresistible temptation to reduce this duplication.

I can think of 2 basic approaches to this:

1. Abstract the login routine into its own function which accepts the WebDriver instance, performs the steps it needs to log in, and returns the driver once complete.
2. Write a step that is performed once at the beginning of the entire run which does the same thing. All following tests can safely assume they will be logged in.

Never do 2.
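Approach 1, by contrast, is safe to adopt. Here is a minimal sketch of such a helper in Python. The URL, the element locators, and the name `log_in` are placeholders I made up for illustration, so swap in whatever your application actually uses.

```python
# Hypothetical values: replace them with your application's real URL and locators.
LOGIN_URL = "https://staging.example.com/login"


def log_in(driver, username, password):
    """Log in through the UI using the given WebDriver and hand the driver back.

    `driver` is any Selenium WebDriver instance. The locator strategies are
    passed as plain strings; "id" and "css selector" are the values behind
    Selenium's By.ID and By.CSS_SELECTOR constants.
    """
    driver.get(LOGIN_URL)
    driver.find_element("id", "username").send_keys(username)
    driver.find_element("id", "password").send_keys(password)
    driver.find_element("css selector", "button[type='submit']").click()
    return driver
```

Each test still creates its own driver and calls `log_in(driver, ...)` as its first step, so the copy-and-paste is gone but no browser session is shared between tests.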

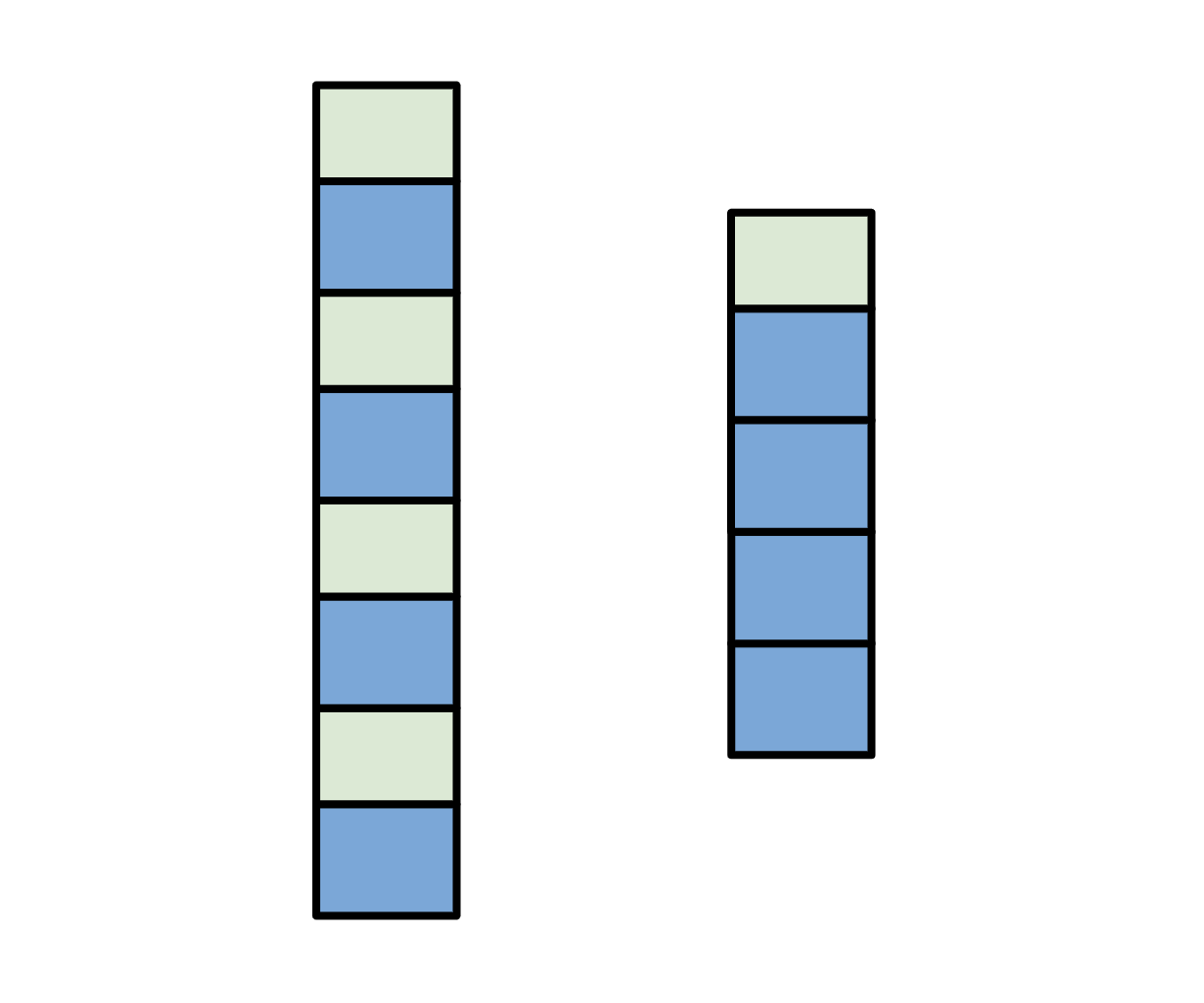

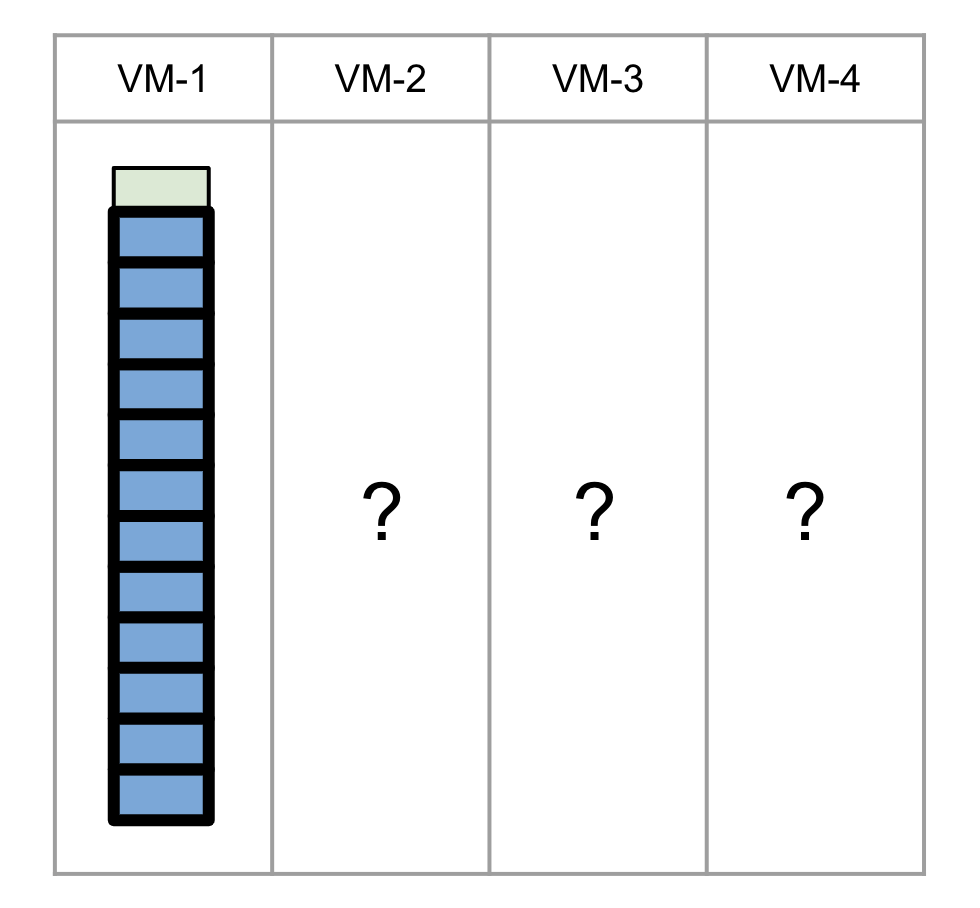

The left side is how long your suite takes when each test has to log in to your application itself. The right side is how long it takes when they don't. Tempting...

It's difficult to illustrate why this should be avoided because, once implemented, you're very likely to see real time gains. This is especially true for small suites of tests where only the most surface-level tests are written and the vast majority of time had been spent logging in.

The feedback loop doesn't help: the more tests you add onto this chain the more time you're saving logging in only once for all of them :|

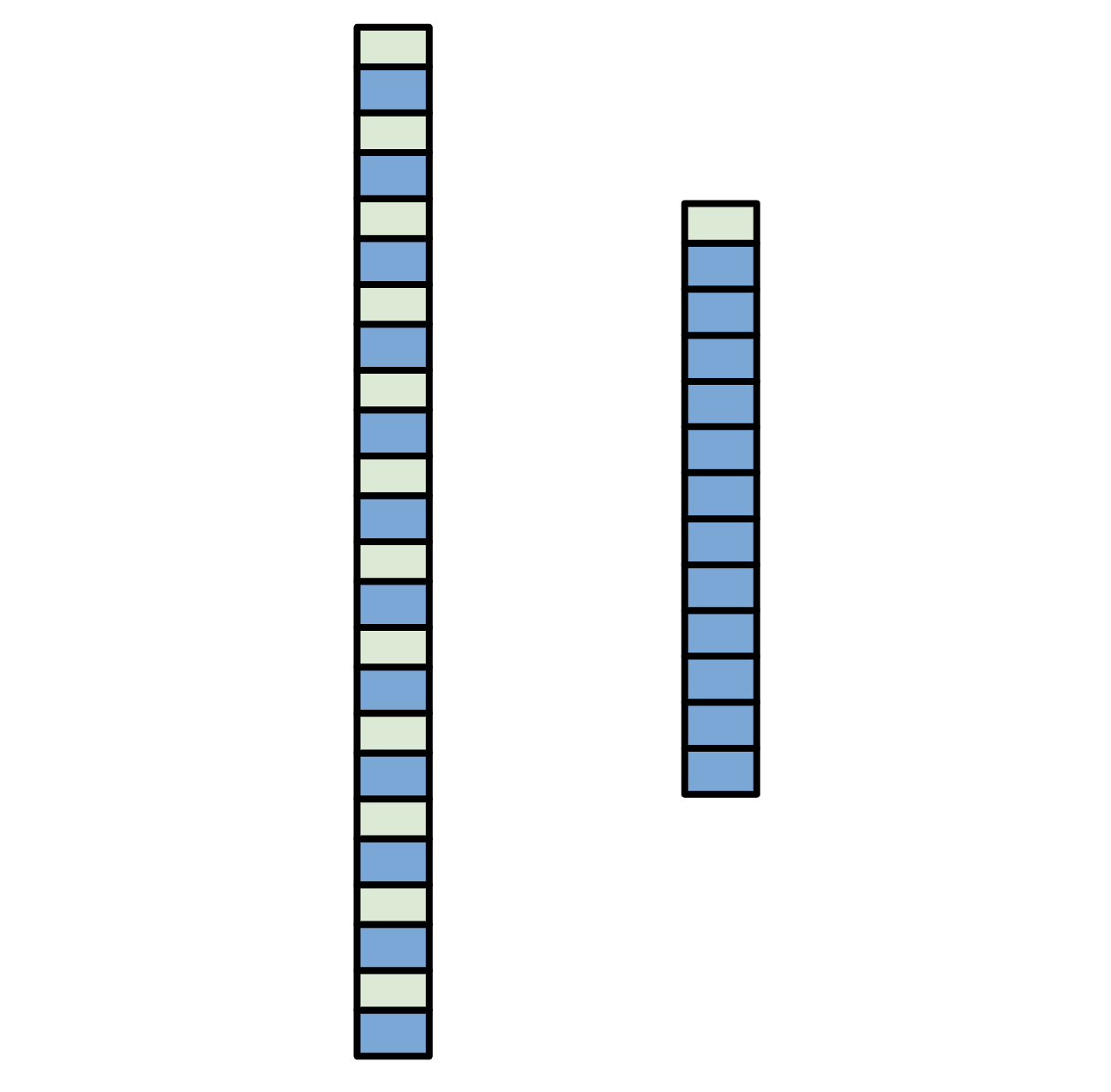

But it becomes more obvious after you reach a few test automation milestones.

There's a milestone where you'll want to execute the tests on a machine other than your own because they take too long and you probably need to work on other things while they are running without having to tip-toe around an automated browser on your desktop.

Another occurs when you need to debug one of the tests in this monolithic suite.

If the buggy test happens somewhere inconvenient, like around the midpoint of the suite, you definitely want to avoid having to run all the previous tests before being able to observe the failing one. But the way you've set up the tests, the WebDriver is created only before one specific test, so you may already be in trouble if you need to move code around to debug an individual test.

There's another phenomenon that can happen during this milestone: when you run the test by itself it passes, but when it runs within the suite it fails. This is probably due to some implicit state dependency set up by the preceding tests. The act of running previous tests turns into the "required" setup steps for following tests. It can take a while to untangle problems like this.

Tests that run together in the same order tend to depend on each other to set up implicit state so that they can succeed. If this condition progresses enough it can cause the suite to almost "bind" together. It has to move as one, indivisible unit or the tests will fail. At least without some effort to untangle them.
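Both failure modes can be reproduced without a browser at all. The toy below is only an illustration: `SharedBrowser` is a made-up stand-in for one long-lived WebDriver session, and the three "tests" and the cart they poke at are hypothetical.

```python
class SharedBrowser:
    """Stand-in for one long-lived browser session (no real browser involved)."""

    def __init__(self):
        self.cart = []


def cart_starts_empty_test(browser):
    assert browser.cart == []


def add_item_test(browser):
    browser.cart.append("book")
    assert "book" in browser.cart


def checkout_test(browser):
    # Quietly relies on an earlier test having put something in the cart.
    assert browser.cart


def passes(test, browser):
    """Run one test function and report whether its assertions held."""
    try:
        test(browser)
        return True
    except AssertionError:
        return False


def run_suite(tests):
    """Run tests in order against ONE shared browser, like the monolith does."""
    browser = SharedBrowser()
    return [passes(test, browser) for test in tests]


def run_alone(test):
    """Run a single test against a fresh browser."""
    return passes(test, SharedBrowser())
```

`run_alone(cart_starts_empty_test)` is `True`, but `run_suite([add_item_test, cart_starts_empty_test])` is `[True, False]` because the first test left a book behind. In the other direction, `run_suite([add_item_test, checkout_test])` is `[True, True]` while `run_alone(checkout_test)` is `False`: the earlier test has become unwritten setup for the later one.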

This may still not be a terrible situation for you. However, another milestone is likely to occur where, for whatever reason, the feedback from your tests is needed for some hotfix or other urgent matter. Initially you can claim that they run on your machine so it'll take them a while. Your boss says OK and walks away. You swear you could see the gears turning in her eyes...

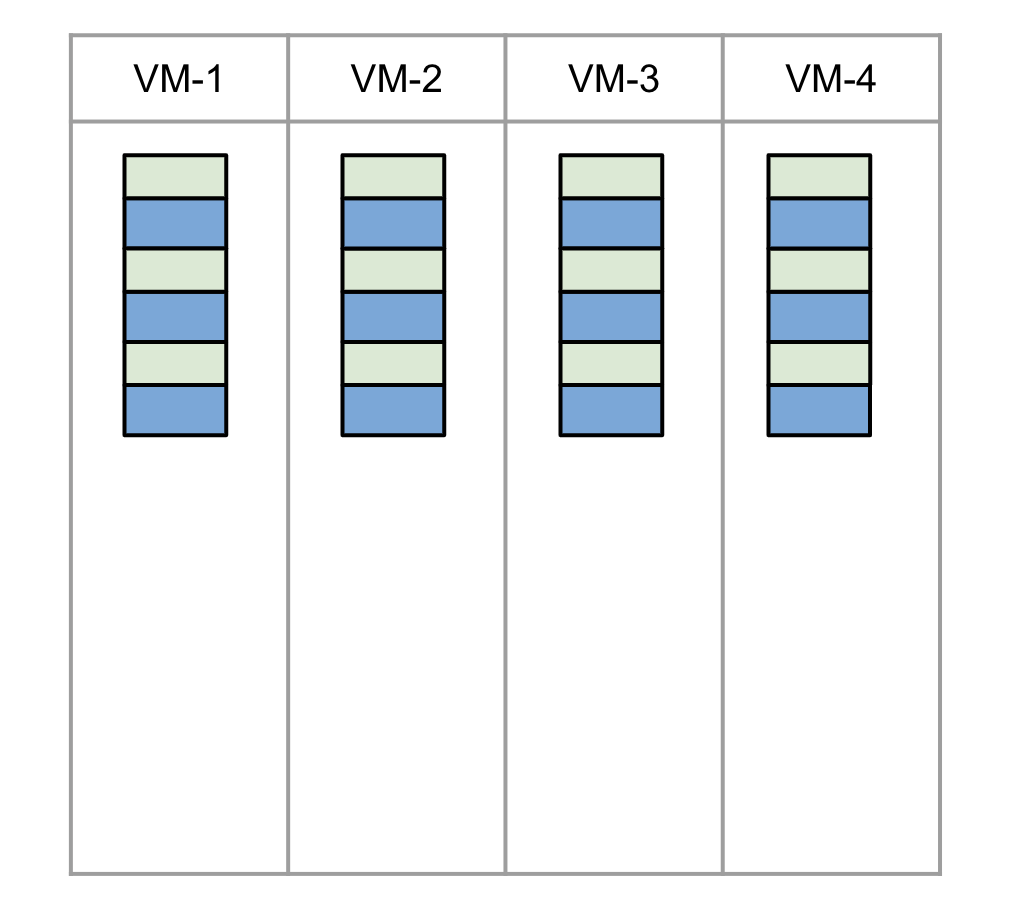

She comes back the next day and delivers 4 virtual machines ready to go to run your tests! Now the test suite should run roughly 4x as quickly! Throw some hardware at it! However, since the tests cannot be broken up into smaller chunks to distribute across these machines, you cannot take advantage of the extra slots.

This is the milestone where it's easiest to see the advantages of having each test begin with its own, fresh WebDriver instance. Before we reached this milestone, we didn't need to distribute tests across multiple machines and logging in for each test was slowing the suite down. But when browser automation suites reach a critical mass they can take so long that they need to be parallelized in order to finish them in time for a release.
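Once every test creates its own WebDriver and logs in by itself, splitting the suite across machines is mostly bookkeeping. Here is a rough sketch, assuming each machine knows its own index and the total machine count. The `shard` function and its parameters are my own invention for this post, not part of Selenium or any test runner.

```python
def shard(test_ids, shard_index, shard_count):
    """Pick the tests that machine number `shard_index` (0-based) should run.

    Sorting first means every machine computes the same split without
    having to talk to the others.
    """
    if not 0 <= shard_index < shard_count:
        raise ValueError("shard_index must be in range(shard_count)")
    return sorted(test_ids)[shard_index::shard_count]
```

With ten tests named `test_00` through `test_09` and 4 machines, machine 0 would run `test_00`, `test_04`, and `test_08`. This only works if the tests really are independent; a monolith that must run in order cannot be sliced this way.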

To dig yourself out of this situation, you'll need to do a few things. You can designate a new directory in your code base that's only for tests which can be run in any order and which all create their own WebDriver instance and log into the application themselves. You can begin automating new tests only in this way to essentially "stop the bleeding". Gradually, you can peel tests off of your monolith until they can all run independently. You could also start another monolith of tests that runs on any of the other available VMs, though I'd recommend avoiding that :)

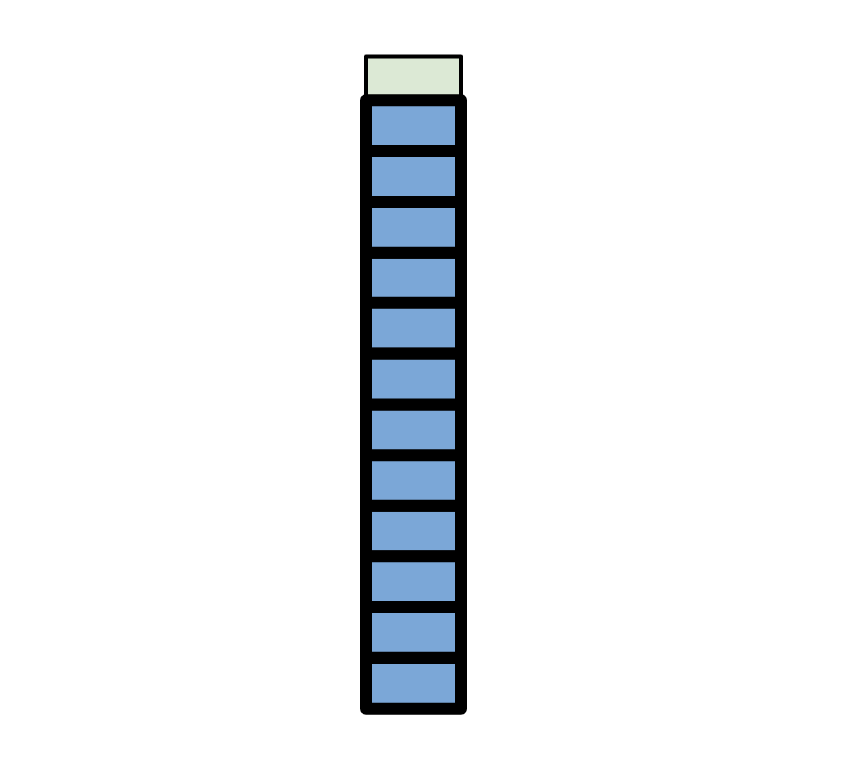

Ultimately, you're trying to achieve a setup like the following:

Each test begins with a fresh WebDriver instance.
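One way to get there, sketched with Python's built-in `unittest`, is a base class that creates the driver in `setUp` and always quits it afterwards. This assumes the `selenium` package and Chrome are available; `make_driver`, `FreshDriverTestCase`, and the `driver_factory` hook are names I picked for the example.

```python
import unittest


def make_driver():
    """Start a brand-new browser session.

    Assumes the selenium package and Chrome are installed; imported lazily so
    this module can be loaded on machines without them.
    """
    from selenium import webdriver

    return webdriver.Chrome()


class FreshDriverTestCase(unittest.TestCase):
    """Base class that gives every test method its own WebDriver.

    A test subclasses this and uses self.driver, for example:

        class ProfilePageTest(FreshDriverTestCase):
            def test_title(self):
                self.driver.get("https://staging.example.com/profile")
                self.assertIn("Profile", self.driver.title)
    """

    # Hypothetical hook: override in a subclass to use another browser.
    driver_factory = staticmethod(make_driver)

    def setUp(self):
        self.driver = self.driver_factory()
        # addCleanup runs even when the test fails, so browsers never pile up.
        self.addCleanup(self.driver.quit)
```

Because `setUp` runs before every test method, no test can inherit cookies, pages, or half-finished state from the one before it, and any single test can be run or debugged on its own.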

There are still challenges with this approach, especially if you're using some staging or dev environment version of your web application. In these situations, you're likely reusing concepts like users, passwords, emails, and probably resources that are specific to your web application that may be hard to generate automatically. Things get even more difficult and flaky if you don't have an API to interact with to help you CRUD the test data you need. But that's for another blog post!